watsonx.ai Pipeline

Overview

This approach uses only Python and the watsonx.ai SDK. Python handles data preprocessing, transformation, and orchestration of the pipeline steps, while watsonx.ai provides the AI-driven analysis and predictions. The solution is designed for teams that want to demonstrate the capabilities of generative AI. It shows that watsonx.ai can address the use case without extensive integration work.

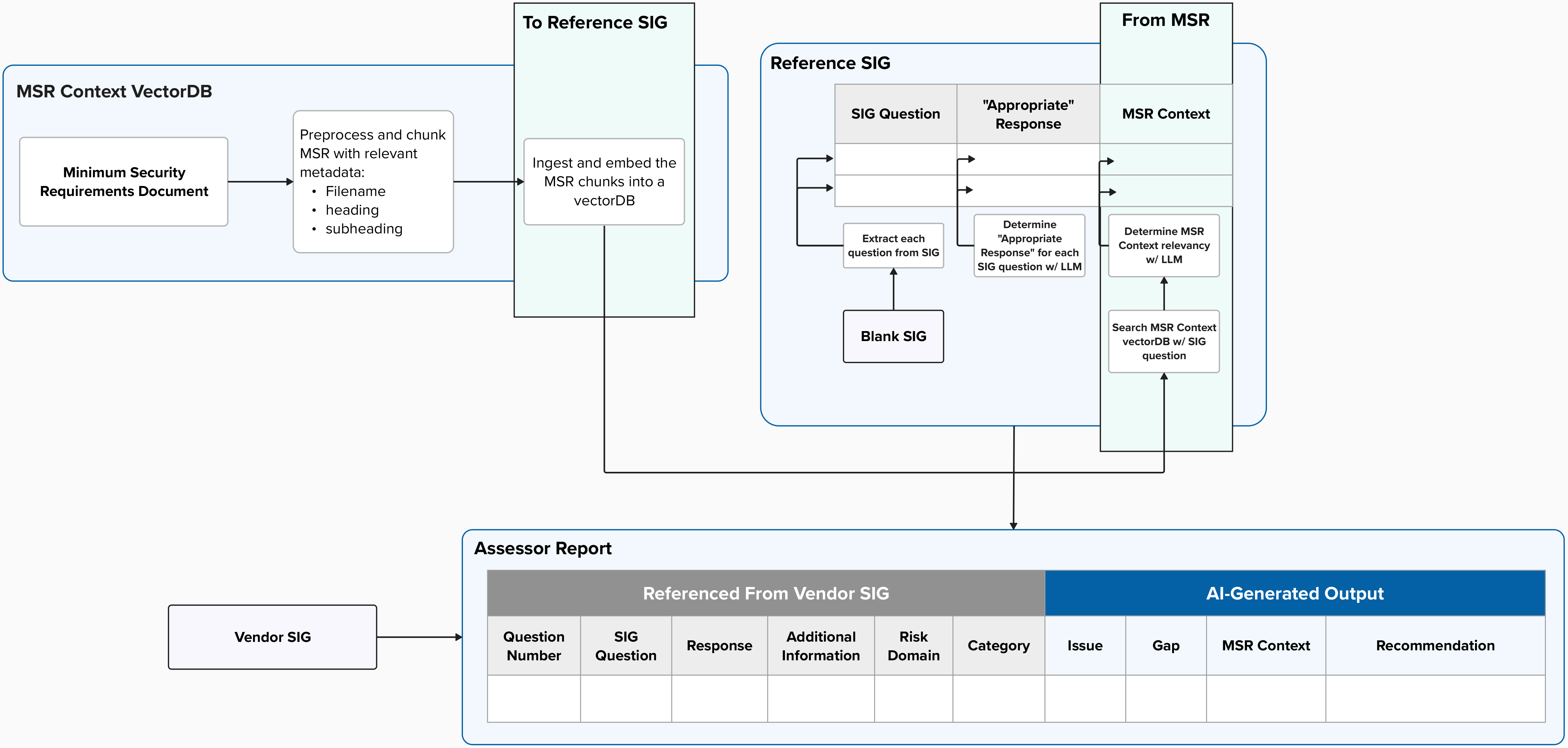

This solution consists of three main components:

- Preprocessing and chunking the Minimum Security Requirements (MSR) Document

- Creating a Reference SIG

- Creating the Assessor Report

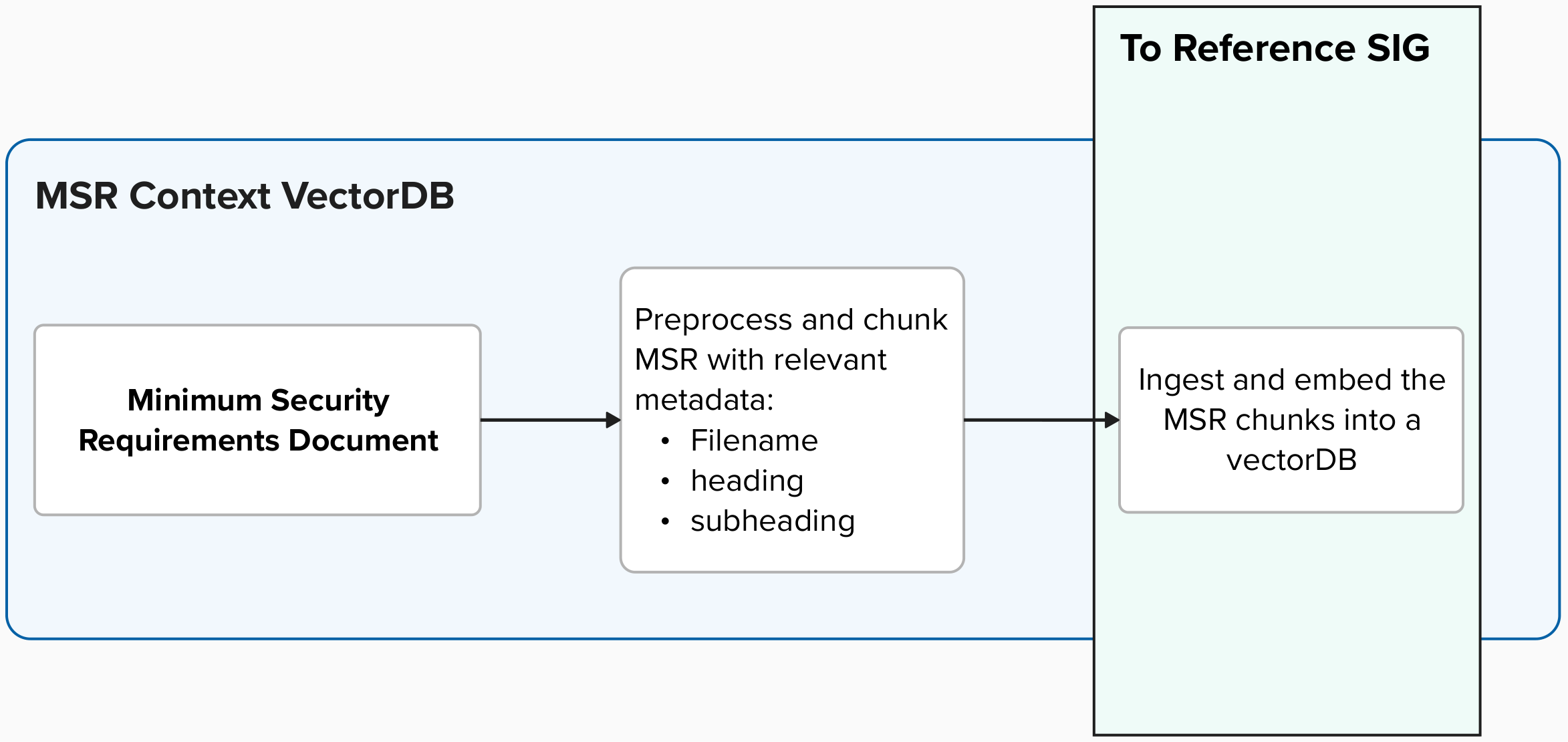

Preprocess Source Documents

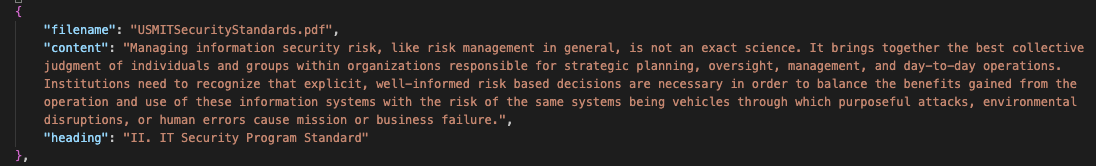

The first step in creating the assessor report was to preprocess the Minimum Security Requirements document into "chunks", one per subheading. This ensures that each embedding in the vector database carries accurate metadata and context. Once the document has been converted into the chunked JSON, it is ingested into the vector database.
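The chunking step can be sketched in plain Python. This is a minimal illustration, not the project's code. It assumes that the MSR document has already been extracted to plain text and that subheadings are numbered lines. The `SUBHEADING` pattern and the `chunk_by_subheading` name are hypothetical. Each chunk keeps its section number, title, and source file as metadata, so a retrieved embedding can be traced back to a specific MSR section.

```python
import json
import re

# Assumption: MSR subheadings are numbered lines such as "3.2 Access Control".
# This pattern is hypothetical; adjust it to the real document layout.
SUBHEADING = re.compile(r"^(\d+(?:\.\d+)*)\s+(\S.*)$")


def chunk_by_subheading(text, source):
    """Split document text into one chunk per numbered subheading."""
    chunks = []
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        match = SUBHEADING.match(line)
        if match:
            # A new subheading closes the previous chunk and opens a new one.
            if current is not None:
                chunks.append(current)
            current = {
                "section_id": match.group(1),
                "section_title": match.group(2),
                "source": source,
                "body": [],
            }
        elif current is not None and line:
            # Body text is attached to the most recent subheading.
            current["body"].append(line)
    if current is not None:
        chunks.append(current)
    # Collapse each body into a single "text" field that will be embedded.
    for chunk in chunks:
        chunk["text"] = " ".join(chunk.pop("body"))
    return chunks


# Illustrative sample text, not real MSR content.
sample = """1 Access Control
1.1 Passwords
Passwords must be at least 12 characters.
1.2 Remote Access
Remote access requires multi-factor authentication.
"""

chunks = chunk_by_subheading(sample, source="msr_document.txt")
print(json.dumps(chunks, indent=2))
```

This sketch has two limitations. Text before the first subheading is discarded. A body line that happens to start with a number followed by a space would also be mistaken for a heading, so a real implementation would likely match on the document's actual heading styles. The resulting list can be written out with `json.dump`, then embedded and ingested into the vector database.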

Solution Flow

An example of the preprocessed document as a JSON file:

Create Reference SIG

A Reference SIG must be created before an accurate assessor report can be generated. For each SIG question, the Reference SIG contains:

- the "right" or most "appropriate" response

- the most relevant MSR context

- the most appropriate Issue information from the given "Issue Catalog"
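One way to represent a Reference SIG row is a small dataclass with one field per item in the list above. The sketch below assumes this shape. The class name, the field names, and the sample values are all hypothetical. The `Optional` issue fields reflect an assumption that some questions have no matching Issue Catalog entry.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ReferenceSigEntry:
    """Ground-truth record for one SIG question (field names are hypothetical)."""
    question_id: str
    question: str
    reference_response: str          # the "right" or most appropriate response
    msr_context: str                 # most relevant MSR chunk text
    issue_id: Optional[str]          # matching Issue Catalog entry, if any
    issue_description: Optional[str]


# Illustrative values only; not taken from a real SIG or Issue Catalog.
entry = ReferenceSigEntry(
    question_id="Q-001",
    question="Is multi-factor authentication required for remote access?",
    reference_response="Yes",
    msr_context="Remote access requires multi-factor authentication.",
    issue_id="ISSUE-001",
    issue_description="Multi-factor authentication is not enforced for remote access.",
)

# Storing the Reference SIG as JSON lets it be built once and reloaded later.
serialized = json.dumps(asdict(entry))
restored = ReferenceSigEntry(**json.loads(serialized))
print(restored == entry)
```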

The Reference SIG is created once and updated only when the SIG questions change. This step performs most of the LLM-related work. As a result, generating the assessor report can rely on more efficient and consistent logic-based comparisons that treat the Reference SIG as the ground truth.
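The Reference SIG only needs updating when the SIG questions change. A pipeline could therefore store a hash of each question alongside the Reference SIG and regenerate only the entries whose wording changed. This is a minimal sketch of that idea, assuming questions are keyed by a stable ID. The function names and sample IDs are hypothetical, and the original pipeline may simply rebuild the whole Reference SIG.

```python
import hashlib
from typing import Dict, List, Tuple


def question_hashes(questions: Dict[str, str]) -> Dict[str, str]:
    """Map each question ID to a SHA-256 digest of its wording."""
    return {
        qid: hashlib.sha256(text.encode("utf-8")).hexdigest()
        for qid, text in questions.items()
    }


def questions_to_update(
    questions: Dict[str, str], stored_hashes: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Return (IDs to regenerate, IDs to drop) relative to the stored Reference SIG."""
    current = question_hashes(questions)
    # New or reworded questions need fresh LLM-generated reference content.
    regenerate = sorted(
        qid for qid, digest in current.items() if stored_hashes.get(qid) != digest
    )
    # Questions no longer in the SIG should be removed from the Reference SIG.
    removed = sorted(set(stored_hashes) - set(current))
    return regenerate, removed


previous = {
    "Q-001": "Is MFA required for remote access?",
    "Q-002": "Are passwords at least 12 characters?",
}
stored = question_hashes(previous)
updated = {
    "Q-001": "Is MFA required for all remote access?",
    "Q-003": "Is data encrypted at rest?",
}
print(questions_to_update(updated, stored))   # (['Q-001', 'Q-003'], ['Q-002'])
print(questions_to_update(previous, stored))  # ([], [])
```

Regenerating only the changed entries keeps the number of LLM calls proportional to the size of a SIG update rather than the size of the whole questionnaire.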

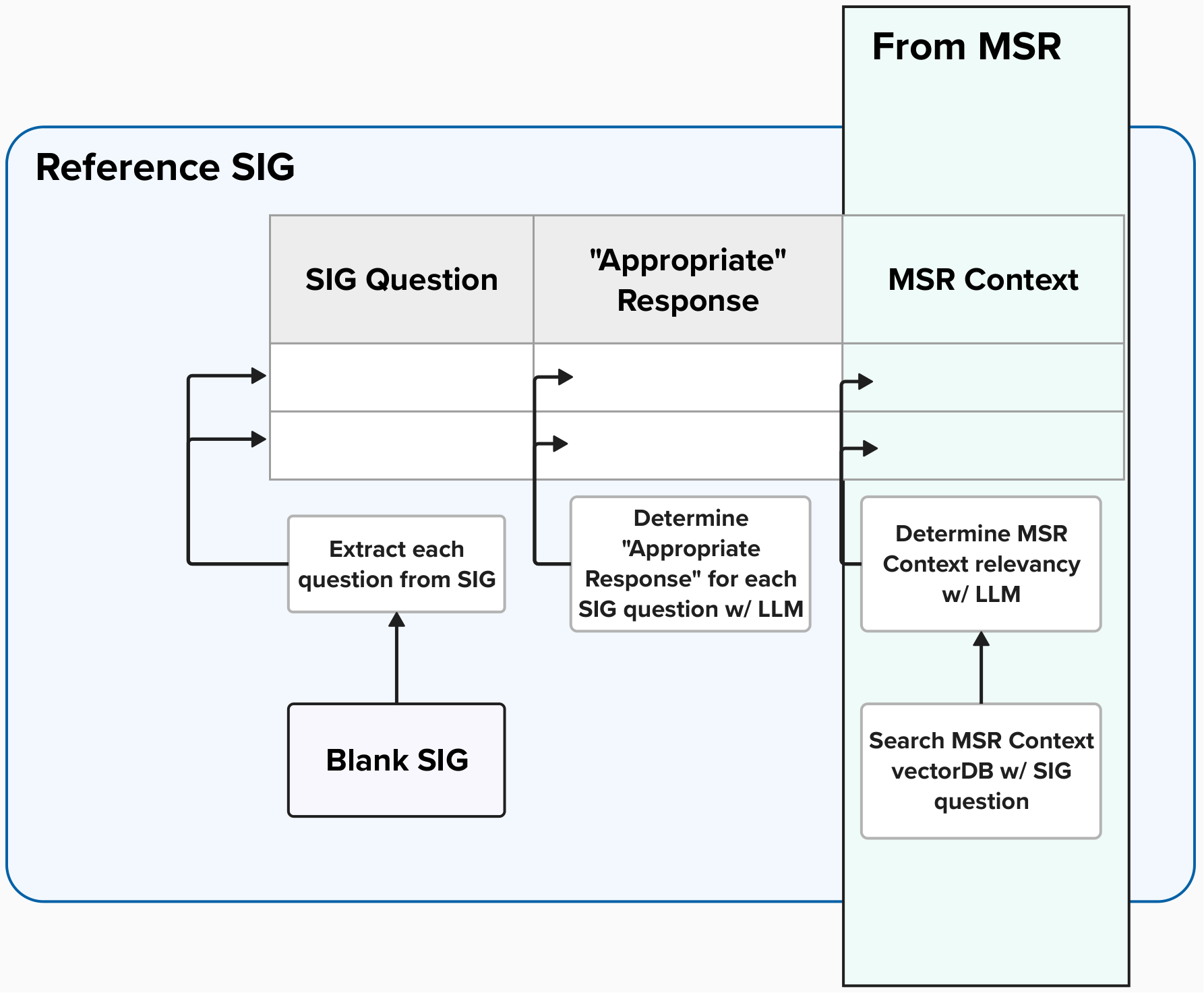

Solution Flow

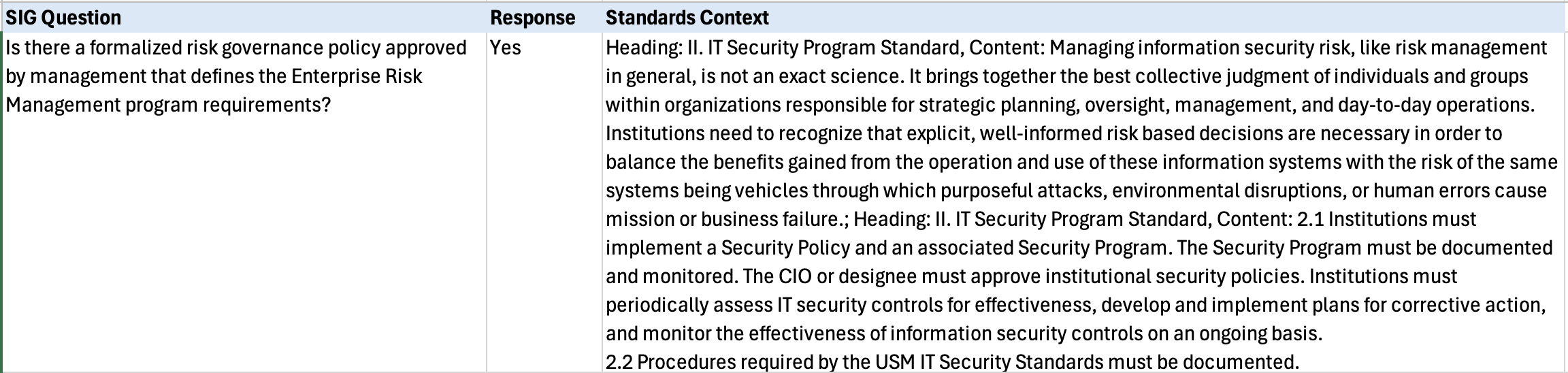

An example of the Reference SIG content:

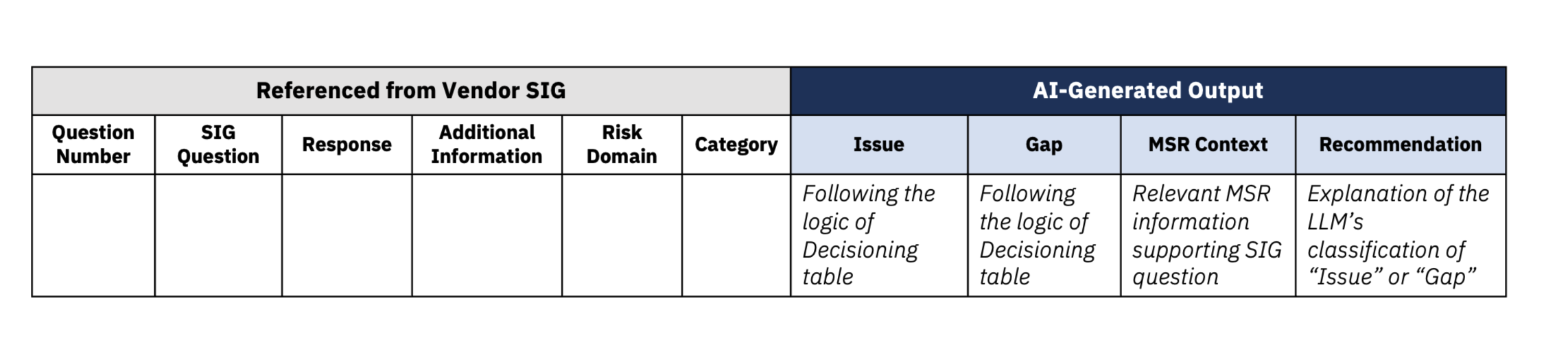

Create Assessor Report

The assessor report can be created once the Reference SIG has been generated. The final assessor report consists of ten features, each either pulled from the original vendor SIG or generated by the generative AI pipeline:

AI-Generated Output

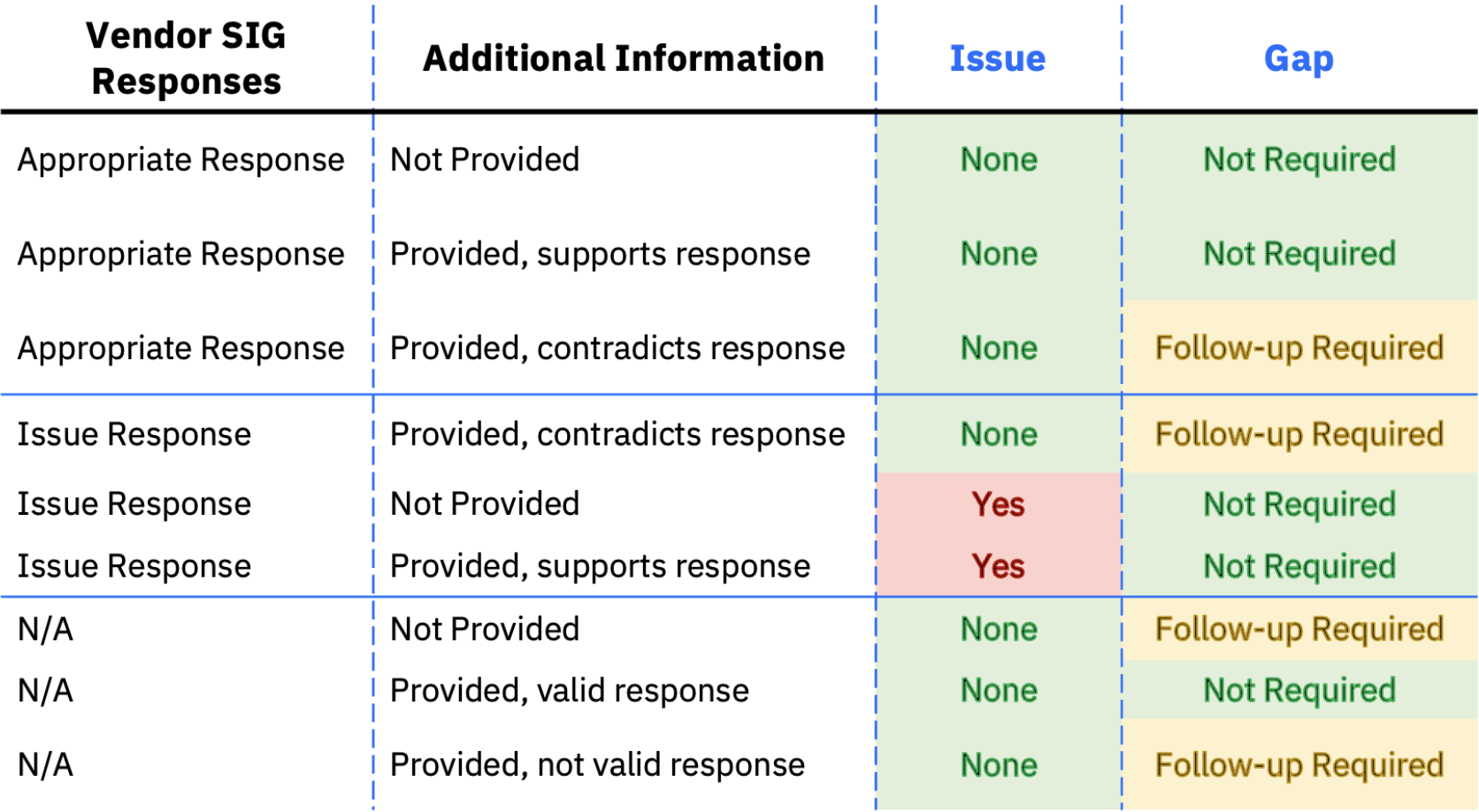

- Issue: classified by comparing the vendor SIG's response with the Reference SIG's response for the same SIG question.

- Gap: classified by using an LLM to determine whether any given "Additional Information" supports or contradicts the given vendor SIG response.

- MSR Context: retrieved by searching the SIG question against the MSR context vector database and pulling the relevant MSR content.

- Recommendation: generated by an LLM for any gaps and/or issues identified.
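The Issue and Gap features can be sketched as two small functions. The sketch assumes SIG responses are short categorical answers (such as "Yes" or "No"), so an exact, case-insensitive comparison is meaningful. It takes the LLM as a plain `generate(prompt) -> str` callable, which in this pipeline would wrap a watsonx.ai model call. The callable, the prompt text, and the function names are all hypothetical. In this sketch the Issue check is pure logic against the Reference SIG, and only the Gap check calls a model.

```python
from typing import Callable


def normalize(response: str) -> str:
    """Trim and lower-case a response so 'Yes ' and 'yes' compare equal."""
    return response.strip().lower()


def classify_issue(vendor_response: str, reference_response: str) -> bool:
    """Flag an issue when the vendor answer differs from the Reference SIG answer."""
    return normalize(vendor_response) != normalize(reference_response)


# Hypothetical prompt; the pipeline's actual prompt wording is not given.
GAP_PROMPT = (
    "You are reviewing a vendor security questionnaire.\n"
    "Question: {question}\n"
    "Vendor response: {response}\n"
    "Additional information: {additional_information}\n"
    "Does the additional information SUPPORT or CONTRADICT the vendor response? "
    "Answer with exactly one word: SUPPORT or CONTRADICT."
)


def classify_gap(
    question: str,
    response: str,
    additional_information: str,
    generate: Callable[[str], str],
) -> bool:
    """Return True (a gap) when the LLM says the additional information contradicts the response."""
    if not additional_information.strip():
        # Assumption: with no additional information there is nothing to contradict.
        return False
    prompt = GAP_PROMPT.format(
        question=question,
        response=response,
        additional_information=additional_information,
    )
    verdict = generate(prompt).strip().upper()
    return verdict.startswith("CONTRADICT")


# A stub stands in for the LLM so the example runs offline.
def fake_llm(prompt: str) -> str:
    return "CONTRADICT" if "planned" in prompt else "SUPPORT"


print(classify_issue("Yes ", "yes"))  # False
print(classify_issue("No", "Yes"))    # True
print(classify_gap("Is MFA required?", "Yes", "MFA rollout is planned for next year.", fake_llm))  # True
```

Asking the model for a single constrained word keeps its output easy to parse. A production version would likely also handle replies that match neither label.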

All AI-generated content was classified using a decision table, which systematically determined how specific features aligned with predefined criteria, as shown below: